The graphical user interface revolutionized human-computer interaction because it recreated a context that our brains evolved to navigate—manipulation of objects in two- and three-dimensional space. Natural language has the same kind of connection to our evolutionary past—it’s by far the most natural medium for us to express complex needs and ideas. Language-based interfaces won’t replace graphical interfaces in all contexts, but they’ll fundamentally change the ways we communicate with computers.

If issues with LLM consistency and accuracy remain unsolved, we won’t use them in contexts where these are critical. However, we’ve already crafted a complex society from the material of other highly unreliable systems—human beings. We create guardrails that mitigate risk associated with the fact that humans are imperfect processors of information. Pedestrian crossings exist because pedestrians and drivers aren’t completely reliable. We draw lines to tell us which side of a road to drive on and use colorful lights to tell us when to stop and go. We seldom get risk to zero, but through systems design, we make it acceptable. Experiments in using LLMs will keep failing in spectacular ways, but we’ll learn where and how they can be applied, and as necessary, we’ll set guardrails around them.

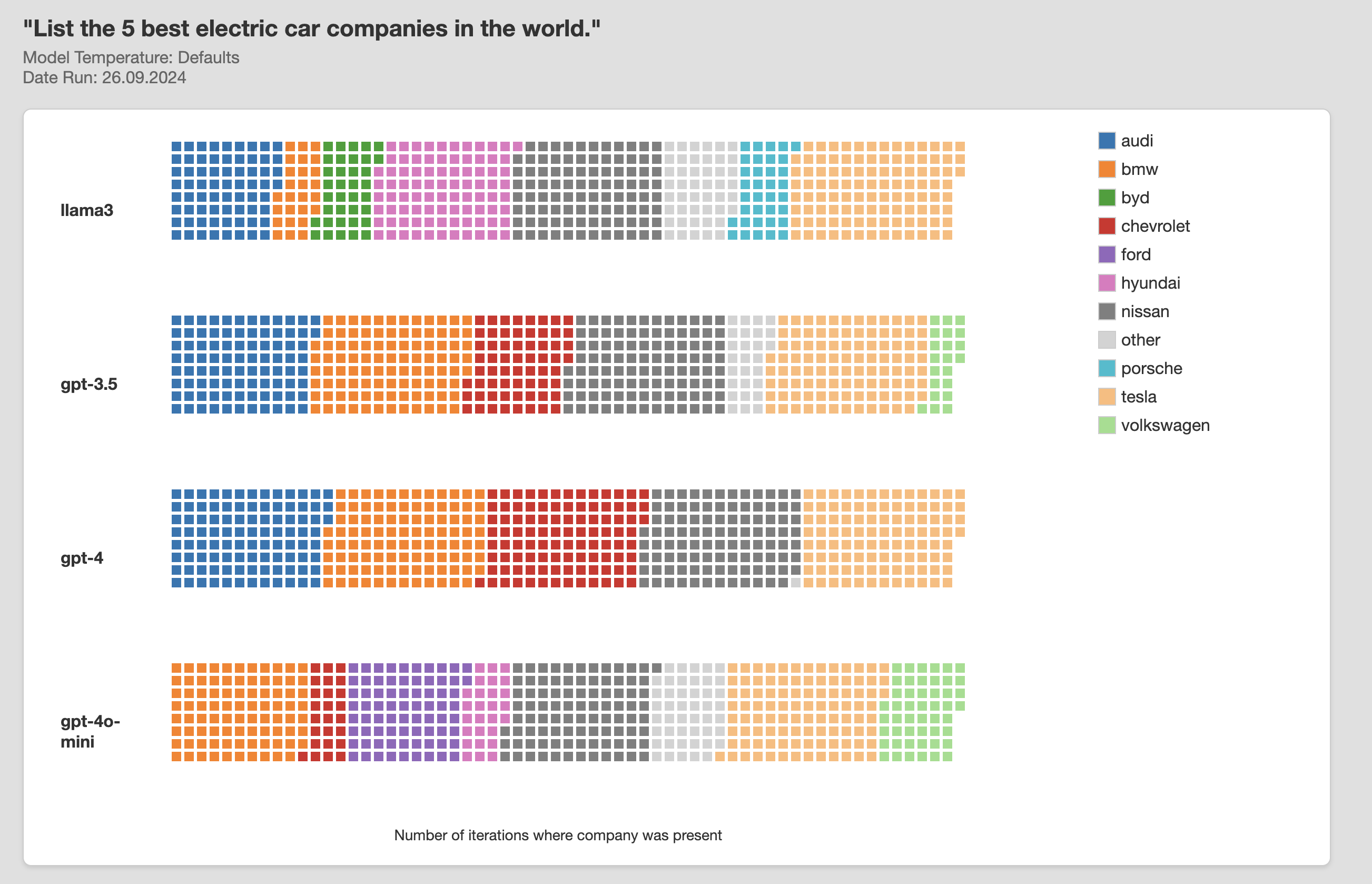

The companies building mass-market LLMs understand the importance of making their tools safe and reliable. They shape guardrails at a system level: hard limits intended to stop people from using AI systems for things like election manipulation or creating chemical weapons. However, when systems and people meet in the real world, unexpected things happen. Driving safety isn’t just about advanced braking systems; it’s a combination of technical, environmental, and human factors that come together to address risk from different directions. This is a space where Human-AI Interaction design can flourish. By prototyping scenarios that put people and AI systems together and studying what happens, we can uncover opportunities and anticipate problems before systems are deployed in the wild.

Beyond point safety of AI systems, there’s a broader safety question of how to design them in ways that enrich rather than reduce human experience. Do we want to build tools that hijack human neurochemical reward systems in more and more efficient ways, or would we rather boost the qualities we most admire in ourselves: curiosity to explore, will to persevere, desire to create and build? This is a design question for the developers of AI systems and the governments that create legislation around them, but also for the individuals, teams, and companies incorporating AI into products and services.