For a few years now I’ve run research projects using LLM-based prototypes to study what happens when people and AI agents work together to solve problems. That has meant hundreds of hours spent interacting with LLMs in unusual ways and observing others doing the same. Here are three practices I picked up along the way that I find helpful in my own use of LLMs and in my design of AI agents:

Anthropomorphization

You can talk to an LLM like a “thing” or like a “person”, and there’s a difference. Humans have a ‘social brain’: a system of biological factors that evolved to aid our interactions with other people. It’s this system that leads us to engage in all kinds of social rituals: greetings, goodbyes, politeness, concern. The ways we feel and think during an interaction change depending on the quality of the relationship we have with the person we’re talking to. I’ve found this carries over to our interactions with LLMs too. In certain contexts, like creative collaboration, suspending disbelief and talking to an LLM the way one might talk to a friend or confidante can change how an interaction flows and where it leads. It doesn’t really matter to our brains whether or not an LLM thinks like us. It’s just important that it can mimic the ways we interact well enough to trigger our social responses.

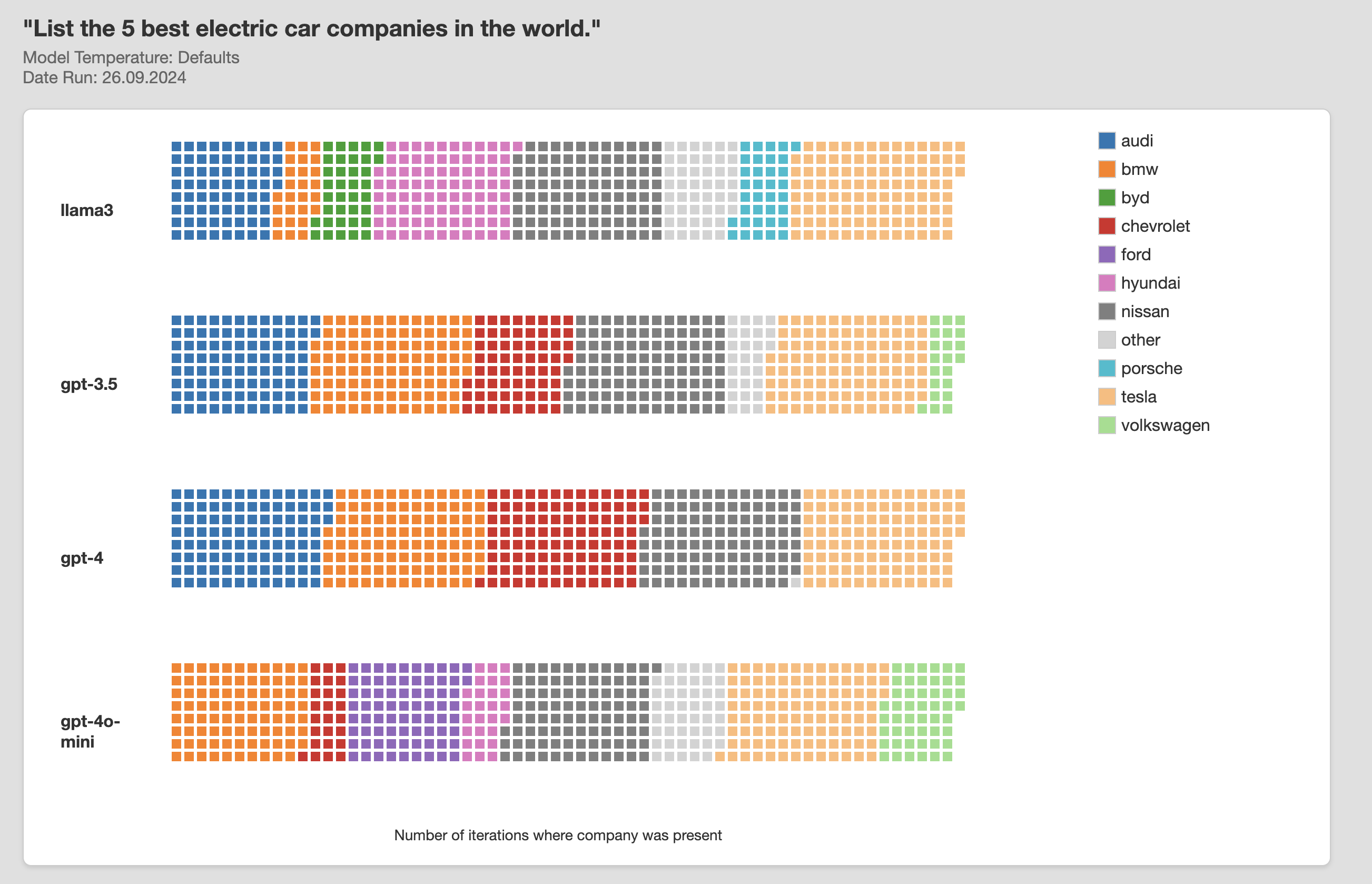

Accepting Fallibility

People have a trust bias when it comes to AI systems. We’re more ready to accept human fallibility behind the wheel, for example, than we are to accept accidents caused by self-driving cars. This bias carries over to interactions with LLMs too. I’ve puzzled over why the factual errors and inconsistencies they produce seem to dominate our attention so disproportionately. We already rely heavily on our own minds and the minds of others, systems that are highly prone to error and hallucination. We use judgment and context to decide whom to trust and when, and for the majority of our interactions “good enough” reliability is fine. I’ve observed that extending to LLMs the same tolerance of fallibility that we extend to other people can help us find greater utility in them.

Deliberate Experimentation

In one of the studies I worked on, participants were asked to spend a few days pretending that an LLM agent was a remote team member they were collaborating with. This meant sharing information back and forth, assigning tasks to one another and keeping a record of progress. One of the unexpected side effects of being forced to work with an LLM was that people started discovering cognitive tasks they could outsource to their ‘partner’ that they hadn’t previously thought about. The transactional costs of accessing cognitive abilities from other people have historically been really high. As the cost of intelligence drops, the equation changes. It can take deliberate experimentation to break the habit of relying on our own minds to solve problems for us.