Recently I was part of a team doing experimental research around AI systems and social interaction – specifically the concept of ‘Social Presence’. Social Presence is the degree to which people experience others as real and engaging in mediated communication environments*. While the term is often used in VR studies, it’s increasingly applied to how humans experience interactions with AI systems, which are becoming ever more capable of simulating the experience of talking to a real person. The biological state changes associated with social presence can affect our cognitive and decision-making processes, stress levels, learning and motivation – qualities that are important in contexts of work collaboration. To provide an experience of social presence, an AI model doesn’t need to convince a person it is human – it just needs to interact in ways that trigger the human brain’s social responses.

“There’s something magical in the realm of building social experiences around the feeling of human presence and being there with another person and this physical perception where we’re very physical beings” Mark Zuckerberg, Acquired Podcast, September 2024

An Experiment

Twenty research participants were given a text-based chat window and instructed to converse with an unknown partner for 10 minutes. The control group used a chat window with a human being on the other end. The experiment group spoke with an LLM-based agent instructed to interact as realistically as possible in the persona of a human being. Neither group was told who or what they would be interacting with. At the end of their conversation, each participant completed an adapted version of the “Networked Minds Social Presence Inventory” – a tool used by researchers to assess perception of social presence. Questions included:

- Did the person seem to understand or empathise with your emotions?

- Was there a sense of shared experience or mutual understanding between you and the person?

- Did you trust the person’s responses and intentions?
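
As a rough illustration of how answers to questions like these might be turned into per-factor scores, here is a minimal sketch. It assumes each question is rated on a 7-point scale and grouped under a factor. The factor names, the `factor_means` helper and all ratings below are hypothetical placeholders, not the study's instrument or data.

```python
from statistics import mean


def factor_means(responses):
    """Average each factor's ratings across all participants in a group.

    `responses` is a list with one dict per participant, mapping a
    factor name to that participant's ratings for the factor's questions.
    """
    pooled = {}
    for participant in responses:
        for factor, ratings in participant.items():
            pooled.setdefault(factor, []).extend(ratings)
    return {factor: mean(ratings) for factor, ratings in pooled.items()}


# Placeholder ratings for illustration only (assumed 1-7 scale).
human_group = [
    {"empathy": [6, 5], "mutual_understanding": [6, 6], "trust": [5, 6]},
    {"empathy": [5, 6], "mutual_understanding": [5, 5], "trust": [6, 6]},
]
llm_group = [
    {"empathy": [4, 5], "mutual_understanding": [4, 4], "trust": [5, 4]},
    {"empathy": [5, 4], "mutual_understanding": [3, 5], "trust": [4, 5]},
]

human_scores = factor_means(human_group)
llm_scores = factor_means(llm_group)

# Print the two group averages side by side for each factor.
for factor in human_scores:
    print(f"{factor}: human={human_scores[factor]:.2f}, llm={llm_scores[factor]:.2f}")
```

With a sample this small, group averages like these are best read descriptively rather than as evidence of a statistically reliable difference.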

Results and Implications

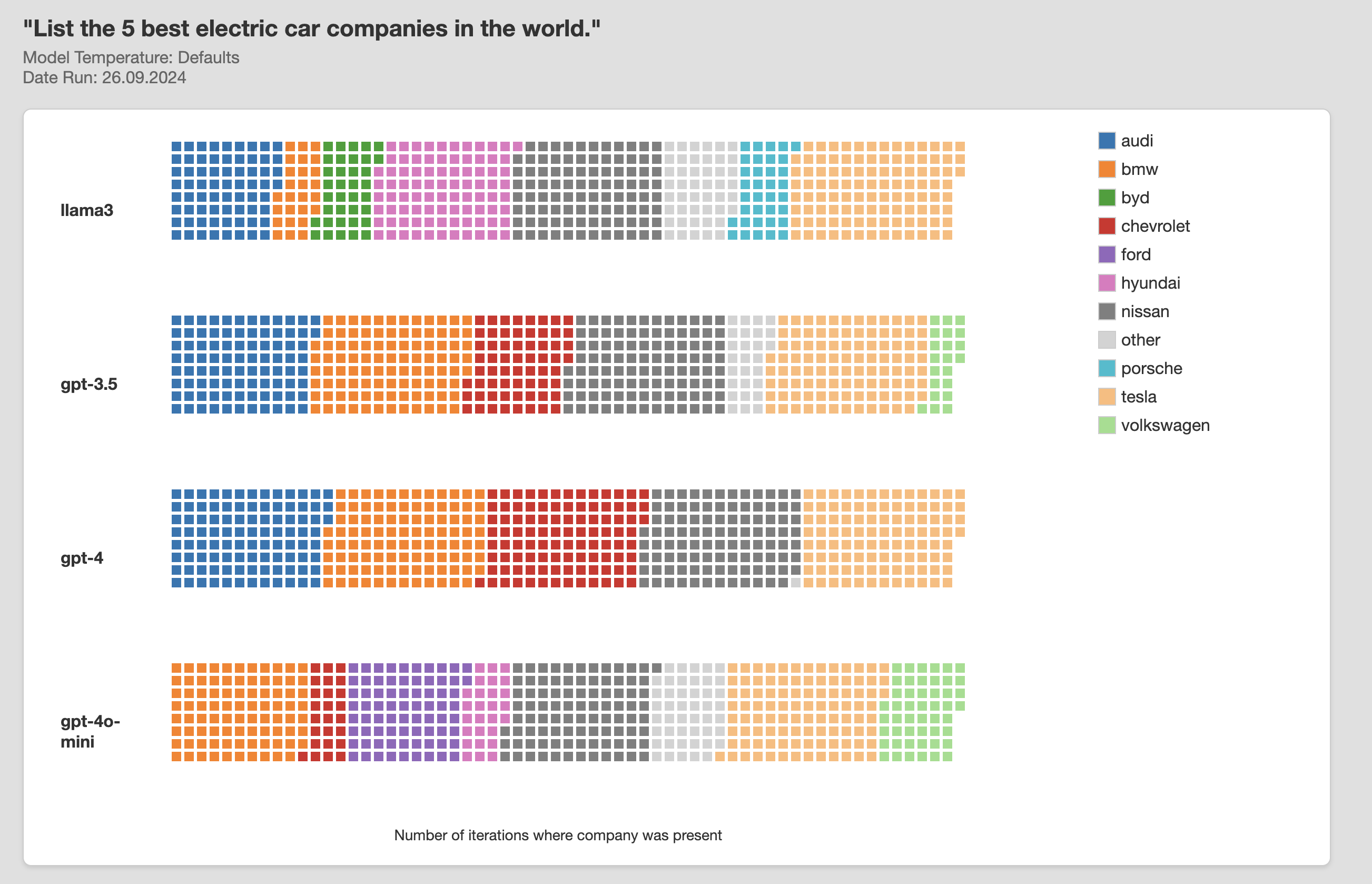

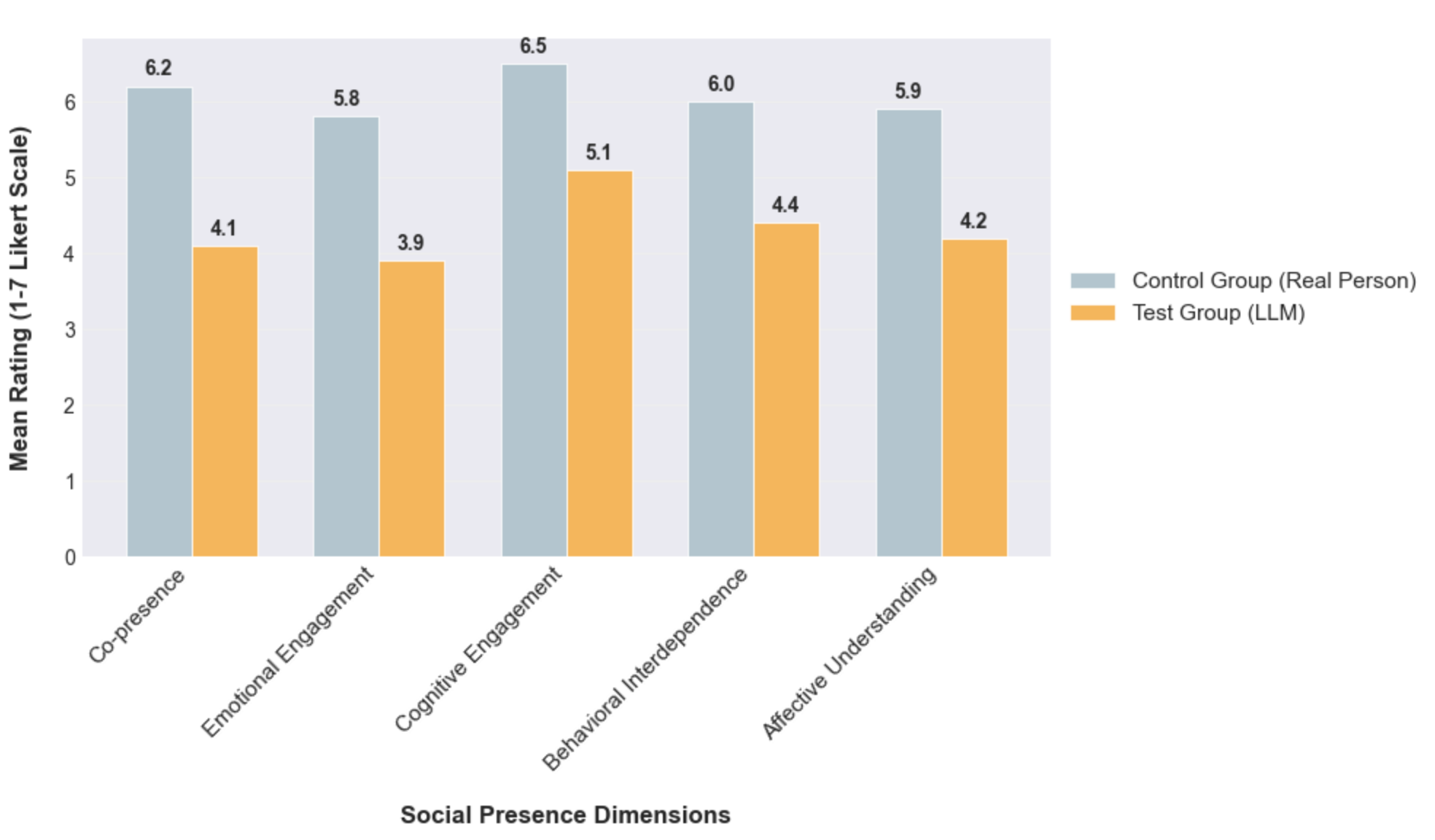

The experiment group that spoke to the LLM-based agent reported experiences of social presence across all 5 related factors – though to a lesser degree than the control group that interacted with a person.

The experiment used a small sample, relied on a not wholly reliable self-reporting measure and wasn’t fully blind – subjects might have guessed they were interacting with an LLM and inferred the nature of the experiment. However, it demonstrates how science-based user research can be used in the design and development of AI-based products and services.

* (Biocca and Harms, 2002)